ORIGINAL ARTICLE

Year: 2015 | Volume: 1 | Issue: 1 | Page: 26-30

Life Skills Training Programs and Empowerment of Students in Schools: Development, Reliability and Validity of Context, Input, Processes, and Product Evaluation Model Scale

Shervin-sadat Hashemian-Esfahani¹, Arash Najimi², Ahmadreza Zamani¹, Ali-asghar Asadollahi Shahir³, Nahid Geramian⁴, Parastoo Golshiri¹

1 Department of Community Medicine, School of Medicine, Isfahan University of Medical Sciences, Isfahan, Iran

2 Department of Health Education and Health Promotion, School of Health, Isfahan University of Medical Sciences, Isfahan, Iran

3 Department of Health Insurance, Health Insurance Organization, Gorgan, Golestan, Iran

4 Department of Mental Health, Provincial Health Center, Isfahan University of Medical Sciences, Isfahan, Iran

Date of Web Publication: 10-Aug-2015

Correspondence Address:

Dr. Parastoo Golshiri

Department of Community Medicine, School of Medicine, Isfahan University of Medical Sciences, Isfahan, Iran

Source of Support: None, Conflict of Interest: None

Introduction: This study aimed to investigate the reliability and validity of an evaluation scale based on the context, input, processes, and product (CIPP) evaluation model, within the framework of life skills education programs in schools of Isfahan (Iran). Methods: A researcher-designed scale was prepared according to the objectives of the program and based on the CIPP evaluation model checklists. The preliminary scale had 43 items. The face and content validity of the scale were examined by a panel of experts. Finally, the scale was administered to a group of people participating in the program. Exploratory factor analysis was used to evaluate construct validity, and Cronbach's alpha was used to measure internal consistency. Results: The content validity index of the scale was 0.84. Factor analysis indicated that the CIPP evaluation model scale consisted of four factors (context, input, process, and product) and included 34 items. The reliability coefficient was 0.87 for the whole scale and 0.82-0.93 for the four factors. Conclusion: The scale based on the CIPP evaluation model is a valid and reliable instrument that can be used to evaluate life skills training programs.

Keywords: Context evaluation, input evaluation, life skills, process evaluation, product evaluation, context, input, processes, and product model

How to cite this article:

Hashemian-Esfahani Ss, Najimi A, Zamani A, Shahir AaA, Geramian N, Golshiri P. Life Skills Training Programs and Empowerment of Students in Schools: Development, Reliability and Validity of Context, Input, Processes, and Product Evaluation Model Scale. J Hum Health 2015;1:26-30

How to cite this URL:

Hashemian-Esfahani Ss, Najimi A, Zamani A, Shahir AaA, Geramian N, Golshiri P. Life Skills Training Programs and Empowerment of Students in Schools: Development, Reliability and Validity of Context, Input, Processes, and Product Evaluation Model Scale. J Hum Health [serial online] 2015 [cited 2018 Aug 21];1:26-30. Available from: http://www.jhhjournal.org/text.asp?2015/1/1/26/162529

Introduction

Because of social change, the ever-increasing complexity of societies, and expanding social relations, preparing people to deal with difficult situations seems necessary. Hence, psychologists have proposed life skills education in schools to prevent psychological and social disorders. [1] The training aims to increase the psychosocial abilities of children and adolescents so that they can deal adaptively with the needs, problems, and difficulties of life. [2] The life skills program is based on the principle that children and adolescents need, and have a right, to defend themselves and their interests in difficult life situations. [3]

The term "life skills" applies to a wide range of psychosocial and interpersonal skills that help people make informed decisions, communicate effectively, cope with difficulties, manage themselves, and lead healthy and productive lives. [4],[5] As a result, people are able to accept responsibility for their social roles and face daily problems without harming themselves or others. [6],[7]

So far, the life skills training program has been implemented in various countries, and its success has been demonstrated. Even in some developing countries, life skills training is part of the school curriculum. Iran, also recognizing the importance and necessity of implementing the life skills program, has taken significant actions in this field. [8],[9] Although many life skills training projects for students have been carried out in Iran, it can be safely stated that most of them have been left without any evaluation or assessment of their results. Evaluation is a dynamic inquiry into the features and benefits of a program that also examines the effectiveness and efficiency of the projects conducted. [10],[11]

Because of the diversity of life skills training programs, proposing a single model for evaluating them is not easy. Moreover, the multifaceted nature of evaluation, given the large number of major factors and challenges involved, makes the evaluation process highly complex. [12] This shows the need for a specific evaluation model that is consistent with the program planning model. Fitzpatrick et al. classify evaluation approaches into six groups: objectives-oriented, management-oriented, consumer-oriented, expertise-oriented, adversary-oriented, and participant-oriented approaches. [13] In education, the management-oriented approach, one of the most important approaches, provides managers with the information they need about program implementation. Among management-oriented approaches, Stufflebeam's model was developed to facilitate managers' decision making; as a holistic and comprehensive model, it can examine a program systematically and from multiple perspectives. This evaluation model is known as the context, input, processes, and product evaluation model (CIPP evaluation model). [14]

The objectives of the CIPP evaluation model include the growth and development of programs, the creation and provision of useful information, helping people judge and improve the value of various training programs, and contributing to the development of program policies. One of the strengths of the CIPP model is that it provides a useful and simple tool to help evaluators answer questions about the process and results of a program; moreover, evaluators can pose many questions at each stage of the CIPP model. [15] Given the lack of evaluation of life skills training programs and the comprehensive and flexible framework of the CIPP evaluation model, this study aimed to develop a tool for evaluating the life skills training program based on the CIPP model.

Methods

Participants

The study population consisted of teachers, school administrators, and training counselors of the education departments and regions implementing the life skills training program. The life skills training program was conducted in Isfahan province in 2012 with the following purposes: increasing the mental health of students, their families, and school personnel; improving the educational status of students; identifying students' educational problems and planning to solve them; reducing the prevalence of social harms; and producing positive changes in the knowledge, attitudes, and behaviors of students. All persons covered by the program (teachers, school administrators, and training counselors) were included in the present study by the census method.

Scale

The study scale was designed by the researchers, considering the objectives of the program and based on the CIPP evaluation model checklists. [16] Care was taken to use fluent writing and proper phrasing in wording the items; to this end, the opinions of 2 experts in Persian literature were obtained. Items were rated on a 5-point Likert scale: 1, strongly agree; 2, agree; 3, no opinion; 4, disagree; 5, strongly disagree. Each response option was scored from 1 to 5 accordingly.

Face validity

Face validity was assessed in two steps: qualitative and quantitative. In the qualitative step, the relevance of items, ambiguity and insufficient clarity, and difficulty of understanding were examined by a panel of experts (4 education specialists, 5 health promotion specialists, and 2 social medicine experts). At this step, the researcher used the experts' opinions to correct items in each of these respects.

In the quantitative step, the Impact Score was calculated. Experts were asked to rate the importance of each item of the questionnaire on a 5-point Likert scale, from very important (score 5) to not important at all (score 1). Items with an Impact Score greater than 1.5 were considered suitable for further analysis and were retained. [17],[18] In addition, 5 persons selected from the target group gave their views on the simplicity and intelligibility of the scale.
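The article reports the 1.5 retention threshold but not the Impact Score formula itself. The Python sketch below assumes the formulation commonly used in scale-development studies: Impact Score = frequency × importance, where frequency is the proportion of experts rating the item 4 or 5 and importance is the item's mean rating. The function name `impact_score` and the panel ratings are hypothetical, not the study's data.

```python
from statistics import mean


def impact_score(ratings: list[int]) -> float:
    """Impact Score of one item from 1-5 importance ratings.

    Assumed formula: frequency x importance, where frequency is the
    proportion of experts rating the item 4 or 5 and importance is the
    mean rating across all experts.
    """
    if not ratings:
        raise ValueError("at least one rating is required")
    frequency = sum(1 for r in ratings if r >= 4) / len(ratings)
    importance = mean(ratings)
    return frequency * importance


# Hypothetical ratings from an 11-member panel (not the study's data).
panel_ratings = {
    "item_a": [5, 5, 4, 4, 5, 3, 4, 5, 4, 5, 4],
    "item_b": [2, 3, 4, 2, 3, 3, 2, 4, 3, 2, 3],
}

for item, ratings in panel_ratings.items():
    score = impact_score(ratings)
    # Retain the item when its Impact Score exceeds 1.5, as in the article.
    print(f"{item}: impact score = {score:.2f}, retained = {score > 1.5}")
# item_a: impact score = 3.97, retained = True
# item_b: impact score = 0.51, retained = False
```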

Content validity

For this study, the required aspects of content validity were as follows: [19]

- All items should refer to important aspects and the main concept that the scale measures

- All items should be reflective of the studied population

- All items should be reflective of the purpose of the measuring instrument.

Both qualitative and quantitative methods were used to evaluate content validity. [20] In the quantitative method, the content validity ratio (CVR) and the content validity index (CVI) were determined. To examine the CVR, the scale was given to 4 education specialists and 4 health education specialists, who rated each item on a three-point scale: necessary, useful but not necessary, and not necessary. To examine the CVI, the views of 15 experts in specialties related to the field of the study were used; each item was rated for "relevance," "clarity," and "simplicity" on a 4-point Likert scale. [21]
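The two indices can be computed as sketched below. The CVR formula is Lawshe's; the item-level CVI convention (a rating of 3 or 4 counts as endorsing the item) and the averaging method for the scale-level CVI follow Polit and Beck and are assumed here, since the article does not state which variants were used. In this study the CVI would be computed separately for relevance, clarity, and simplicity. All ratings and function names are hypothetical.

```python
def content_validity_ratio(n_essential: int, n_experts: int) -> float:
    """Lawshe's CVR = (n_e - N/2) / (N/2), ranging from -1 to +1.

    n_essential: number of experts who rated the item "necessary".
    """
    half = n_experts / 2
    return (n_essential - half) / half


def item_cvi(ratings: list[int]) -> float:
    """Item-level CVI: share of experts giving 3 or 4 on a 4-point scale."""
    return sum(1 for r in ratings if r >= 3) / len(ratings)


def scale_cvi_average(item_cvis: list[float]) -> float:
    """Scale-level CVI (averaging method): mean of the item-level CVIs."""
    return sum(item_cvis) / len(item_cvis)


# Hypothetical example: 7 of the 8 CVR panelists rate an item "necessary".
print(content_validity_ratio(7, 8))  # 0.75

# Hypothetical relevance ratings from a 15-member CVI panel for one item.
relevance = [4, 4, 3, 4, 3, 4, 4, 2, 4, 3, 4, 4, 1, 4, 3]
print(round(item_cvi(relevance), 2))  # 0.87
```

Averaging the item-level values with `scale_cvi_average` gives a scale-level figure comparable in kind to the average index of 0.84 reported in the Results.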

In the qualitative method, some of the experts were interviewed and asked to suggest adjustments regarding grammar, the use of appropriate and understandable words, proper scoring, the time needed to complete the scale, the appropriateness of the selected dimensions, and the placement of items. After collecting the experts' opinions, the researcher prepared the final version of the scale based on the data obtained.

Construct validity

To investigate the factor structure of the scale, and following similar studies of CIPP evaluation model scales, exploratory factor analysis with principal components extraction and orthogonal (Varimax) rotation, as well as confirmatory factor analysis, were used. The Kaiser-Meyer-Olkin (KMO) statistic and Bartlett's test of sphericity were used to evaluate sampling adequacy and whether the correlations among items were sufficient for factor analysis. [22] A cutoff of 0.4 was set as the minimum factor loading required for an item to be retained on an extracted factor.
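For readers who want to reproduce these checks outside SPSS, both statistics can be computed directly from the item correlation matrix. The NumPy sketch below uses the standard textbook formulas and assumes complete data; the simulated responses and the function names `bartlett_sphericity` and `kmo` are hypothetical. A p-value for Bartlett's statistic can then be taken from the chi-square survival function, for example `scipy.stats.chi2.sf(stat, df)`.

```python
import numpy as np


def bartlett_sphericity(data: np.ndarray) -> tuple[float, int]:
    """Bartlett's test of sphericity for an (n_respondents, p_items) array.

    Returns the chi-square statistic and its degrees of freedom; a large
    statistic means the correlation matrix differs from an identity matrix.
    """
    n, p = data.shape
    corr = np.corrcoef(data, rowvar=False)
    # slogdet is more stable than log(det(...)) for near-singular matrices.
    _, log_det = np.linalg.slogdet(corr)
    statistic = -(n - 1 - (2 * p + 5) / 6) * log_det
    df = p * (p - 1) // 2
    return float(statistic), df


def kmo(data: np.ndarray) -> float:
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy."""
    corr = np.corrcoef(data, rowvar=False)
    inv = np.linalg.inv(corr)
    # Partial correlations: -inv_ij / sqrt(inv_ii * inv_jj).
    scale = np.sqrt(np.outer(np.diag(inv), np.diag(inv)))
    partial = -inv / scale
    off_diag = ~np.eye(corr.shape[0], dtype=bool)
    r2 = np.sum(corr[off_diag] ** 2)
    a2 = np.sum(partial[off_diag] ** 2)
    return float(r2 / (r2 + a2))


# Simulated (hypothetical) responses: 234 respondents, 6 items sharing one factor.
rng = np.random.default_rng(0)
latent = rng.normal(size=(234, 1))
responses = latent + 0.8 * rng.normal(size=(234, 6))

stat, df = bartlett_sphericity(responses)
print(f"KMO = {kmo(responses):.2f}, Bartlett chi2 = {stat:.1f}, df = {df}")
```

KMO values near 1 indicate that partial correlations are small relative to the raw correlations, which is why a high KMO is read as support for factoring the data.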

Reliability

Reliability was determined by the internal consistency method, measured with Cronbach's alpha coefficient. Cronbach's alpha indicates the degree to which a set of items consistently measures the same underlying construct. In this study, an alpha greater than 0.7 was considered acceptable internal consistency.
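Cronbach's alpha compares the sum of the item variances with the variance of the total score. A minimal NumPy sketch of the standard formula follows; the response matrix and the function name `cronbach_alpha` are hypothetical, and sample variances (ddof=1) are assumed throughout.

```python
import numpy as np


def cronbach_alpha(items: np.ndarray) -> float:
    """Cronbach's alpha for an (n_respondents, k_items) score array.

    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score))
    """
    k = items.shape[1]
    if k < 2:
        raise ValueError("alpha requires at least two items")
    item_variances = items.var(axis=0, ddof=1)
    total_variance = items.sum(axis=1).var(ddof=1)
    return float(k / (k - 1) * (1 - item_variances.sum() / total_variance))


# Hypothetical 5-point responses from six respondents to four items.
scores = np.array([
    [1, 2, 1, 2],
    [2, 2, 3, 2],
    [4, 5, 4, 4],
    [3, 3, 2, 3],
    [5, 4, 5, 5],
    [2, 1, 2, 2],
])
alpha = cronbach_alpha(scores)
# Compare against the 0.7 acceptability threshold used in the article.
print(f"alpha = {alpha:.2f}, acceptable = {alpha > 0.7}")
# alpha = 0.96, acceptable = True
```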

The questionnaires were completed within 1 month, in groups and, in some cases, individually. Data were analyzed with SPSS version 21 (SPSS Inc., Chicago, IL, USA) and AMOS 21 using descriptive statistics, Cronbach's alpha coefficients, Pearson correlation coefficients, the KMO statistic, Bartlett's test, and orthogonal rotation.

Results

The final number of participants in this study was 234, including 203 women (86%) and 31 men (14%). Participants' ages ranged from 29 to 57 years. The Impact Scores calculated for face validity showed that all items of the scale had an Impact Score greater than 1.5.

Of the 43 items assessed for content validity based on the experts' opinions, 5 items were eliminated because their scores were below 0.79. The average content validity index of the scale was 0.84.

Before conducting exploratory factor analysis, the KMO test was performed to measure sampling adequacy. The KMO value was 0.81, indicating that the data were suitable for principal components analysis. Bartlett's test was also significant (χ2 = 4494.15, P < 0.001), indicating that the correlations between variables were sufficient for the analysis.

Exploratory factor analysis was performed on 38 items. Initially, the items loaded on nine factors with eigenvalues greater than one; however, these factors could not be given meaningful labels. Because the appropriateness of a four-factor structure has been confirmed by other similar studies, exploratory factor analysis with Varimax rotation was repeated to investigate construct validity, with the number of factors restricted to four [Figure 1].
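The two steps described above (counting components with eigenvalues greater than one, then extracting a fixed number of factors and rotating them) can be sketched in NumPy as follows. This is a minimal sketch, assuming principal component extraction from the correlation matrix and raw Varimax without Kaiser normalization; SPSS applies Kaiser normalization by default, so its loadings may differ slightly. The simulated two-factor data and all function names are hypothetical.

```python
import numpy as np


def principal_component_loadings(data: np.ndarray, n_factors: int):
    """Eigenvalues (descending) of the item correlation matrix and the
    unrotated principal component loadings for the first n_factors."""
    corr = np.corrcoef(data, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(corr)  # eigh returns ascending order
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    loadings = eigvecs[:, :n_factors] * np.sqrt(eigvals[:n_factors])
    return eigvals, loadings


def varimax(loadings: np.ndarray, max_iter: int = 100, tol: float = 1e-6) -> np.ndarray:
    """Raw Varimax rotation (no Kaiser normalization) of a p x k loading matrix."""
    p, k = loadings.shape
    rotation = np.eye(k)
    criterion = 0.0
    for _ in range(max_iter):
        rotated = loadings @ rotation
        target = rotated ** 3 - rotated @ np.diag(np.sum(rotated ** 2, axis=0)) / p
        u, s, vt = np.linalg.svd(loadings.T @ target)
        rotation = u @ vt  # nearest orthogonal matrix keeps the rotation orthogonal
        new_criterion = np.sum(s)
        if new_criterion < criterion * (1 + tol):
            break
        criterion = new_criterion
    return loadings @ rotation


# Simulated (hypothetical) data: 8 items driven by two latent factors.
rng = np.random.default_rng(1)
factors = rng.normal(size=(234, 2))
pattern = np.array([[0.8, 0.0]] * 4 + [[0.0, 0.8]] * 4)  # 8 items x 2 factors
data = factors @ pattern.T + 0.6 * rng.normal(size=(234, 8))

# The number of factors is fixed in advance, as the authors did with four.
eigvals, unrotated = principal_component_loadings(data, n_factors=2)
n_kaiser = int(np.sum(eigvals > 1))  # Kaiser criterion: eigenvalue > 1
rotated = varimax(unrotated)
explained = (rotated ** 2).sum(axis=0) / data.shape[1]
print("components with eigenvalue > 1:", n_kaiser)
print("rotated loadings:\n", np.round(rotated, 2))
print("proportion of variance explained per factor:", np.round(explained, 2))
```

Because the rotation is orthogonal, it redistributes variance among factors without changing each item's communality or the total variance explained, which is why per-factor percentages (such as the 24%, 15%, 11%, and 11% reported below) still sum to the unrotated total.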

Four items with factor loadings < 0.4, or with high loadings on two different factors, were removed from the scale. After these items were removed, the KMO test was repeated, yielding a value of 0.94.
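The removal rule can be expressed as a simple screen over the rotated loading matrix. The article does not define what counts as a "high" loading on a second factor, so the sketch below assumes, hypothetically, that any second loading at or above the same 0.4 cutoff counts as a cross-loading. The function name `flag_items` and the loadings are illustrative, not the study's Table 1.

```python
def flag_items(loadings: dict[str, list[float]], cutoff: float = 0.4) -> dict[str, str]:
    """Return the items to drop and the reason, given rotated loadings per item.

    Assumed rule: drop an item if no loading reaches the cutoff, or if two
    or more loadings reach it (treated here as a cross-loading).
    """
    flagged = {}
    for item, row in loadings.items():
        strong = sum(1 for value in row if abs(value) >= cutoff)
        if strong == 0:
            flagged[item] = "no loading reaches the cutoff"
        elif strong > 1:
            flagged[item] = "cross-loads on two or more factors"
    return flagged


# Hypothetical rotated loadings on four factors (not the study's data).
example = {
    "q1": [0.72, 0.10, 0.05, 0.12],
    "q2": [0.35, 0.20, 0.18, 0.30],
    "q3": [0.52, 0.47, 0.10, 0.08],
    "q4": [0.08, 0.11, 0.66, 0.21],
}
for item, reason in flag_items(example).items():
    print(item, "->", reason)
# q2 -> no loading reaches the cutoff
# q3 -> cross-loads on two or more factors
```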

The results of the factor analysis for the 34-item, four-factor scale are shown in [Table 1]. Factor loadings ranged from 0.45 to 0.78. Together, the four factors explained 61% of the total variance.

Based on the items in each factor, Factor 1 was named product evaluation; it explained 24% of the variance and included 10 items. Factor 2, input evaluation, explained 15% of the variance and included 9 items; Factor 3, process evaluation, explained 11% of the variance and included 9 items; and Factor 4, context evaluation, explained 11% of the variance and included 6 items [Table 1].

The factorial validity of the indicator variables that constitute the scale was tested by confirmatory factor analysis. The χ2 value was 6.583 (P = 0.32), indicating an acceptable fit between the proposed model and the observed data. The comparative fit index (CFI) and normed fit index (NFI) were 0.971 and 0.964, respectively, indicating a very good fit. The root-mean-square error of approximation (RMSEA) was 0.79, indicating a moderate fit.
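The fit indices above are functions of the model χ2, the χ2 of the independence (null) model, their degrees of freedom, and the sample size. The article does not report the degrees of freedom or the null-model χ2, so the sketch below uses purely hypothetical inputs to show the standard definitions. The RMSEA form with N − 1 in the denominator is assumed; some programs use N instead. The function name `fit_indices` is hypothetical.

```python
import math


def fit_indices(chi2_model: float, df_model: int,
                chi2_null: float, df_null: int, n: int) -> dict[str, float]:
    """NFI, CFI and RMSEA from model and independence-model chi-square values."""
    nfi = (chi2_null - chi2_model) / chi2_null
    # Non-centrality estimates, floored at zero.
    noncentral_model = max(chi2_model - df_model, 0.0)
    noncentral_null = max(chi2_null - df_null, noncentral_model)
    cfi = 1.0 - noncentral_model / noncentral_null if noncentral_null > 0 else 1.0
    rmsea = math.sqrt(noncentral_model / (df_model * (n - 1)))
    return {"NFI": nfi, "CFI": cfi, "RMSEA": rmsea}


# Hypothetical inputs (not the study's values): N = 234 respondents.
for name, value in fit_indices(12.0, 8, 400.0, 15, 234).items():
    print(f"{name} = {value:.3f}")
# NFI = 0.970
# CFI = 0.990
# RMSEA = 0.046
```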

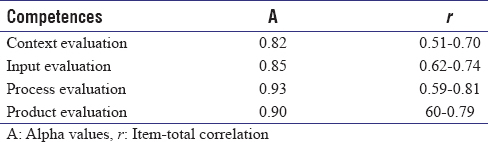

The reliability of the scale was calculated after factor analysis using Cronbach's alpha for the total scale and for each factor. The reliability coefficient was 0.87 for the whole scale and ranged from 0.82 to 0.93 for the four factors. The internal consistency of each factor and the item-total correlation of each item are shown in [Table 2].

Table 2: Cronbach's alpha reliability coefficients and item-total correlations of the components

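Item-total correlations such as those in Table 2 are usually computed in their "corrected" form, in which each item is correlated with the total of the remaining items so that the item does not inflate its own correlation. The article does not state whether the corrected or uncorrected form was used, so the choice below is an assumption; the function name `corrected_item_total` and the responses are hypothetical.

```python
import numpy as np


def corrected_item_total(items: np.ndarray) -> np.ndarray:
    """Correlation of each item with the sum of the other items.

    items: (n_respondents, k_items) array of item scores.
    """
    totals = items.sum(axis=1)
    correlations = []
    for j in range(items.shape[1]):
        rest = totals - items[:, j]  # exclude the item from its own total
        correlations.append(np.corrcoef(items[:, j], rest)[0, 1])
    return np.array(correlations)


# Hypothetical 5-point responses from six respondents to four items.
scores = np.array([
    [1, 2, 1, 2],
    [2, 2, 3, 2],
    [4, 5, 4, 4],
    [3, 3, 2, 3],
    [5, 4, 5, 5],
    [2, 1, 2, 2],
])
print(np.round(corrected_item_total(scores), 2))
```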

Discussion

In this study, Stufflebeam's model (the CIPP model) was used to develop a scale for evaluating the life skills training and student empowerment program. Reasons for choosing this model include its flexible framework, its practicality, and its coverage of different types of evaluation, namely context, input, process, and product evaluation. [23],[24],[25]

In context evaluation, in addition to evaluating and identifying the relevant elements of the educational environment, the problems, needs, and opportunities present in the educational context are identified. The results of this stage help in deciding on program design. [26],[27] Input evaluation seeks ways and methods to reform and improve guidelines and strategies. [27] The input evaluation sought to answer questions such as: To what extent do the visual, audio, and written materials used in the program facilitate learning and draw participants to the program content? Have these materials had a positive effect on students' life skills? Data collected during this phase of the evaluation should help decision makers choose the best possible strategies and resources despite specific limitations.

Process evaluation involves collecting evaluation data once the program has been designed and is being implemented. [28] In process evaluation, the researcher attempts to answer questions such as the following: Has the program been implemented well? What are the barriers to its success? Were there enough counselors and educators? Was sufficient time allotted for training? Were assignments and activities provided to support the training?

The answers to these questions help to monitor and guide implementation. Process evaluation is carried out by monitoring the implementation of activities and collecting data related to the decisions made while these activities are performed. The results of process evaluation are used to modify the program during implementation and to provide a basis for interpreting the outcomes that will be achieved later. [29]

The objective of product evaluation is to measure, interpret, and judge the outcomes of the program. In other words, product evaluation links the products and consequences of the program to the factors related to its context, input, and process. Product evaluation is performed to decide whether to continue, stop, or modify training activities, or to change some of their aspects. [30]

Product evaluation answers questions such as the extent of change in the knowledge and attitudes of students and program implementers, and the skills acquired by students (good listening, teamwork, problem solving, positive selection).

The main limitation of this study was the lack of cooperation and support from administrators and planners because of their concerns about the possible results. Hence, we tried to identify all key people and make the whole evaluation process transparent.

Finally, the results of this study may interest researchers, educators, and planners in the field of life skills. This instrument can be used as an efficient tool to evaluate life skills training programs. Moreover, it may offer a new perspective on the assessment and evaluation of life skills training programs.

Acknowledgment

This study was funded by Isfahan University of Medical Sciences, Isfahan, Iran. It was an MD thesis approved by the School of Medicine, Isfahan University of Medical Sciences (thesis no: 393630).

Financial support and sponsorship

Isfahan University of Medical Sciences, Isfahan, Iran.

Conflicts of interest

There are no conflicts of interest.

References

1. Camiré M, Trudel P, Forneris T. High school athletes' perspectives on support, communication, negotiation and life skill development. Qual Res Sport Exerc 2009;1:72-88.

2. Gould D, Collins K, Lauer L, Chung Y. Coaching life skills through football: A study of award winning high school coaches. J Appl Sport Psychol 2007;19:16-37.

3. Seal N. Preventing tobacco and drug use among Thai high school students through life skills training. Nurs Health Sci 2006;8:164-8.

4. Jones MI, Lavallee D. Exploring the life skills needs of British adolescent athletes. Psychol Sport Exerc 2009;10:159-67.

5. UNICEF. The State of the World's Children 2004: Girls, Education and Development. New York: UNICEF; 2000.

6. Helfrich CA, Aviles AM, Badiani C, Walens D, Sabol P. Life skill interventions with homeless youth, domestic violence victims and adults with mental illness. Occup Ther Health Care 2006;20:189-207.

7. Choueiri LS, Mhanna S. The design process as a life skill. Procedia Soc Behav Sci 2013;93:925-9.

8. Rahmati B, Adibrad N, Tahmasian K. The effectiveness of life skill training on social adjustment in children. Procedia Soc Behav Sci 2010;5:870-4.

9. Moinalghorabaei M, Sanati M. Evaluation of the effectiveness of life skills training for Iranian working women. Iran J Psychiatry Behav Sci 2008;2:23-9.

10. Vajargah KF, Abolghasemi M, Sabzian F. The place of life skills education in Iranian primary school curricula. World Appl Sci J 2009;7:432-9.

11. Fink A. Evaluation for Education and Psychology. California: Sage Publications, Inc.; 1995.

12. Stern MJ, Powell RB, Hill D. Environmental education program evaluation in the new millennium: What do we measure and what have we learned? Environ Educ Res 2014;20:581-611.

13. Fitzpatrick JL, Sanders JR, Worthen BR. Program Evaluation: Alternative Approaches and Practical Guidelines. New York: Pearson Education; 2004.

14. Zhang G, Zeller N, Griffith R, Metcalf D, Williams J, Shea C, et al. Using the context, input, process, and product evaluation model (CIPP) as a comprehensive framework to guide the planning, implementation, and assessment of service-learning programs. J High Educ Outreach Engagem 2011;15:57-84.

15. Stufflebeam DL. The CIPP model for evaluation. In: Evaluation Models. Netherlands: Springer; 2000. p. 279-317.

16. Stufflebeam DL. CIPP evaluation model checklist. Western Michigan University, The Evaluation Centre; 2007. Retrieved June 2, 2009.

17. Kolagari S, Zagheri Tafreshi M, Rassouli M, Kavousi A. Psychometric evaluation of the role strain scale: The Persian version. Iran Red Crescent Med J 2014;16:e15469.

18. Lacasse Y, Godbout C, Sériès F. Health-related quality of life in obstructive sleep apnoea. Eur Respir J 2002;19:499-503.

19. Yaghmaie F. Content validity and its estimation. J Med Educ 2003;3:25-7.

20. Polit DF, Beck CT. The content validity index: Are you sure you know what's being reported? Critique and recommendations. Res Nurs Health 2006;29:489-97.

21. Lawshe CH. A quantitative approach to content validity. Pers Psychol 1975;28:563-75.

22. Weaver B, Maxwell H. Exploratory factor analysis and reliability analysis with missing data: A simple method for SPSS users. Quant Methods Psychol 2014;10:143-52.

23. Ho WW, Chen WJ, Ho CK, Lee MB, Chen CC, Chou FH. Evaluation of the suicide prevention program in Kaohsiung City, Taiwan, using the CIPP evaluation model. Community Ment Health J 2011;47:542-50.

24. Al-Khathami AD. Evaluation of Saudi family medicine training program: The application of CIPP evaluation format. Med Teach 2012;34 Suppl 1:S81-9.

25. Shams B, Golshiri P, Najimi A. The evaluation of mothers' participation project in children's growth and development process: Using the CIPP evaluation model. J Educ Health Promot 2013;2:21.

26. Park HY. Evaluation of airline service education using the CIPP model: Focus on factors which influenced satisfaction and recommendation of the training program. J Korea Contents Assoc 2012;12:510-23.

27. Stufflebeam DL, Shinkfield AJ. Evaluation Theory, Models, and Applications. San Francisco: Jossey-Bass; 2007.

28. Coryn CL, Hattie JA. The transdisciplinary model of evaluation. J Multidiscip Eval 2007;3:107-14.

29. Tseng KH, Diez CR, Lou SJ, Tsai HL, Tsai TS. Using the context, input, process and product model to assess an engineering curriculum. World Trans Eng Technol Educ 2010;8:256-61.

30. Steinert Y, Cruess S, Cruess R, Snell L. Faculty development for teaching and evaluating professionalism: From programme design to curriculum change. Med Educ 2005;39:127-36.

[Figure 1]

[Table 1], [Table 2]
